History, AI, and Non-Consumption: Part I, Winter is Coming

From Ancient Dreams to Modern Realities

“One age cannot be completely understood if all the others are not understood. The song of history can only be sung as a whole” – José Ortega y Gasset

Everyone is speed publishing, according to the AI Index report from Stanford. Every year, the number of AI publications increases by the thousands as more researchers join the AI ranks. With all this progress and FOMO, it's easy to lose sight of the long, winding road that led us here. None of it happened overnight: ChatGPT didn’t happen overnight, and Artificial General Intelligence (AGI) will not happen overnight either. It is foundation built upon foundation, and that can take years, decades, sometimes even centuries!

This post takes a step back into the 1930s (and even before), 50s, 60s, 70s, and 80s, when most of the concepts behind what we know as AI today were forged. Here, we take a trip together down memory lane. In this post, we are going to explore the key events in history, meet key people who made it all happen, look into how they influenced AI, and how AI was posed as the ethos of computer science, the ultimate goal upon which all grounding concepts were built. We will also look at AI winters, why they happened, and where we are today.

Note: As I was writing this, I wrote way more sections than I should have, so I decided to break it down into two parts (it would not likely fit in an email anyway). This is part I. In part II, we will compare and contrast history and present, look at AI and innovations in general from the business and economic perspective, and brainstorm on how to build better products catering to a larger group of consumers from the lessons learned in part I.

Before the 1930s

The journey towards artificial intelligence began long before the 1930s. Many early thinkers and inventors laid the groundwork for future AI advancements through a series of theoretical, philosophical, and mechanical innovations. Examples include the Ancient Greek myths, such as those of Talos and Galatea; the mechanical men presented by Yan Shi to King Mu of Zhou in 10th century BC China; and the logical groundwork for AI, with concepts such as the syllogism and means-ends analysis (a lot of details here). During the medieval period, the Banu Musa brothers created a programmable music automaton, and Al-Khawarizmi’s work in arithmetic and algebra gave us the term “algorithm” (9th century). Fast forward to the 1600s, with Schickard’s first calculating clock and Hobbes’s mechanical theory of cognition. Onwards to the 1700s, when Jonathan Swift described a knowledge-generating machine in "Gulliver's Travels", and the 1800s, when Samuel Butler speculated on machine consciousness in "Darwin Among the Machines".

Photo from: https://zkm.de/en/automatische-hydraulische-orgel-der-banu-musa-ibn-shakir

These early milestones/inventions/philosophies showcase the longstanding fascination with creating intelligent machines, which set the stage for AI advancements in the 20th century, the century when modern AI was born.

The 1930s to 1950s

Alan Turing, widely considered the father of theoretical computer science and AI, produced a foundational paper in 1936 on computable numbers, which introduced the concept of the Turing machine and provided a theoretical framework for future computers. By 1941, Konrad Zuse had constructed the first working program-controlled general-purpose computer, and in 1948, Norbert Wiener coined the term "cybernetics," integrating the study of control systems and communication theory.

In 1950, Alan Turing wrote his paper on COMPUTING MACHINERY AND INTELLIGENCE, asking the question, “Can machines think?” He explored and simplified the definition of “intelligence” with the goal of modeling it. Additionally, he proposed the imitation game, the Turing test, as a way to test and evaluate intelligence.

Note on the Turing Test: Today, we discuss Artificial General Intelligence (AGI) and how we get there. The Turing test is one approach to identifying that state. Some argue that the Turing test is not a practical measure of AI. It's easily gamed with simple tricks that exploit human biases to appear “human” rather than demonstrate genuine intelligence, which is arguably not the goal we have in mind. We need a more robust and meaningful assessment of AI capabilities.

In 1955, Newell, Simon, and Shaw began developing the Logic Theorist, often described as the first artificial intelligence program and the first program designed to imitate human problem-solving skills. The program was created to prove theorems in propositional calculus similar to those in Principia Mathematica by Whitehead and Russell. It was showcased at the Dartmouth Summer Session on Artificial Intelligence in 1956, marking a key moment in the history of AI research.

The Dartmouth Summer Research Project of 1956 is often taken as the event that initiated AI as a research discipline ~ Artificial Intelligence (AI) Coined at Dartmouth

The Dartmouth conference brought together many of the field's great minds in one place and also formed the basis for future research and innovations in the field. In the next section, we will discuss the first artificial intelligence white paper, one of the outcomes/artifacts of this gathering of researchers and scientists.

In 1958, John McCarthy (also one of the founding fathers of AI, mainly as he coined the phrase “artificial intelligence”) introduced LISP (short for List Processing), which later became the favored programming language for AI.

Before we finish the 1950s, I’d like to highlight one of the very first demos of AI. If you have been around, you have probably seen “The Mother of All Demos” by Douglas Engelbart in the 60s, demonstrating the computer mouse as a user interface, but have you seen Claude Shannon’s demo of Theseus, the AI mouse? If not, you should! Theseus is probably one of the first manifestations of applied “intelligence”. Shannon’s goal was optimizing telephones and relays, but this remains a great token of how scientists at the time approached the problem.

The Dartmouth Workshop | 1956

In 1955, McCarthy, then a mathematics professor at Dartmouth College, formally proposed a summer workshop to the Rockefeller Foundation. The proposal, titled "A Proposal for the Dartmouth Summer Research Project on Artificial Intelligence," outlined the workshop's goals and marked the first official use of the term "artificial intelligence."

With funding secured from the Rockefeller Foundation, the workshop was held in the summer of 1956 on the Dartmouth College campus. While the original plan envisioned a two-month collaborative effort, the workshop ultimately unfolded as a more loosely structured gathering, with participants coming and going throughout the summer.

The Dartmouth workshop was attended by the founders of AI, and other key figures were invited as well. Among them were future Nobel prize winners John F. Nash Jr. (1928–2015) and Herbert A. Simon (1916–2001).

A Bold Conjecture: Machines That Learn and Think

The proposal for the Dartmouth workshop laid out a bold conjecture. Here is a quote from it:

We propose that a 2 month, 10 man study of artificial intelligence be carried out during the summer of 1956 at Dartmouth College in Hanover, New Hampshire. The study is to proceed on the basis of the conjecture that every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it ~ from A proposal for the Dartmouth Summer Research Project on Artificial Intelligence

This quote encapsulated the core ambition, the vision, of AI research at the time, which can be boiled down to creating machines capable of learning, reasoning, and problem-solving on par with humans.

During the workshop, scientists explored a wide range of topics, most of which form the basis and the core of what we know as AI. It's all there since the 50s 🙂. Amongst those topics, I find two to be quite important:

Neural Nets: Early researchers in the field, like Marvin Minsky, delved into the idea that artificial neural nets (networks today) could simulate the brain's learning processes.

“It may be speculated that a large part of human thought consists of manipulating words according to rules of reasoning and rules of conjecture.”

“How can a set of (hypothetical) neurons be arranged so as to form concepts? Considerable theoretical and experimental work has been done on this problem by Uttley, Rashevsky and his group, Farley and Clark, Pitts and McCulloch, Minsky, Rochester and Holland, and others. Partial results have been obtained, but the problem needs more theoretical work.”

Self-Improvement: An ambitious goal at the time was the concept of machines capable of self-improvement. The idea from the paper hinted at the potential for AI systems to enhance their own capabilities continuously. Are we there yet in 2024? I don’t think so. We have self-supervised learning (see my earlier post on pretraining and fine-tuning for more details), but not self-improvement as a broader concept. Self-improvement encompasses more than just learning from data during training or initial phases: it includes any changes an AI might make to improve its performance, capabilities, or efficiency, potentially including but not limited to learning processes. Is the AI self-aware enough?

Not Enough Algorithm-ability

“The major obstacle is not lack of machine capacity, but our inability to write programs taking full advantage of what we have.”

This quote reminds me a lot of where we are today. We have tons of compute, and we did make breakthroughs with transformers, but arguably we might be hitting the limits of what scaling alone can deliver. After GPT-4, we are in catch-up mode, but who knows, time will tell. I still think we need to spend more time on efficiency optimizations and not just throw compute at the problem. Anyway, let’s get back to the founders!

Founding Fathers & Key Figures

From: https://www.linkedin.com/pulse/dartmouth-conference-1956-its-lasting-influence-back-arthur-wetzel/

The Dartmouth workshop brought together some of the most influential figures in the early history of AI:

Claude Shannon

A pioneer in information theory, Shannon's work laid the foundation for understanding how information is transmitted and processed, both in machines and in biological systems. Remember Theseus, the AI mouse 🙂?

During my college years, we studied Shannon’s entropy and information theory. I have since been fascinated by the simplicity of the approach, which I personally think applies not only to senders/receivers between machines in an electrical communication system but also to humans.

We humans communicate via language. Verbally, we have accents, which are noise to the receiver. Sometimes, we are even too lazy to say the whole thing or to provide context, which is, again, noise. We tend to rely on repeating what we say or changing how we formulate it to improve the quality of the knowledge we communicate. That’s the loop 🙂. Actually, Shannon’s theory can be applied in many areas, even in biology!
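To make the idea of entropy a bit more concrete, here is a minimal sketch in Python (my own illustration, not something from Shannon's papers). It estimates entropy in bits per symbol from the character frequencies of a message; the function name `shannon_entropy` and the sample strings are assumptions chosen for the example.

```python
import math
from collections import Counter


def shannon_entropy(message: str) -> float:
    """Estimate entropy in bits per symbol: H = -sum(p * log2(p)).

    Probabilities are taken from the character frequencies of the
    message itself, which is a simplification for illustration.
    """
    counts = Counter(message)
    total = len(message)
    return -sum((n / total) * math.log2(n / total) for n in counts.values())


# A message with one repeated symbol carries no surprise at all,
# while a message whose symbols are all equally likely carries the most.
print(shannon_entropy("aaaa"))
print(shannon_entropy("abab"))
print(shannon_entropy("abcd"))
```

The more predictable the message, the lower the entropy, which is one way to see why repeating ourselves adds redundancy rather than new information.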

Marvin Minsky

Minsky's research on neural networks and cognitive science shaped the AI field for decades to come. What’s fascinating is that Minsky built his prototype of the neural network in hardware. He called it the SNARC, a machine “made out of about 400 vacuum tubes and a couple of hundred relays and a bicycle chain” for simulating learning by nerve nets. The machine simulated rats running in a maze, and the circuit was reinforced each time the simulated rat reached the goal (kinda like Theseus, it could only do one thing: solve the maze).

“A feedback loop reinforced correct choices by increasing the probability that the computer would make them again—a more complicated version of Shannon’s method, and a level closer to how our minds really work. Eventually, the rat learned the maze.”

Conceptually this is very close to how we do it today, with optimizers and error functions! In many ways, Minsky had a “technical” vision of how things would work.
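The reinforcement idea can be sketched in a few lines of Python. This is a toy model under my own assumptions, not a reconstruction of how the SNARC was wired: the maze is a corridor of junctions, a wrong turn ends the run, and the names `train_rat`, `correct_turns`, and `step` are hypothetical.

```python
import random


def train_rat(correct_turns, trials=200, step=0.1, seed=0):
    """Toy reinforcement loop: reward the choices made on successful runs.

    correct_turns is a list like ["L", "R", "L"], one entry per junction.
    Returns the learned probability of turning left at each junction and
    the number of runs that reached the goal.
    """
    rng = random.Random(seed)
    p_left = [0.5] * len(correct_turns)  # start with no preference
    successes = 0
    for _ in range(trials):
        choices = []
        reached_goal = True
        for i, correct in enumerate(correct_turns):
            turn = "L" if rng.random() < p_left[i] else "R"
            choices.append(turn)
            if turn != correct:
                reached_goal = False  # dead end, this run is over
                break
        if reached_goal:
            successes += 1
            # Reinforce: nudge each probability toward the choice just made.
            for i, turn in enumerate(choices):
                target = 1.0 if turn == "L" else 0.0
                p_left[i] += step * (target - p_left[i])
    return p_left, successes


p_left, successes = train_rat(["L", "R", "L"])
print(p_left, successes)
```

Only successful runs change the probabilities, so each junction can only drift toward its correct turn. Modern optimizers are far more general, but the feedback loop has the same shape.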

Photo from: https://www.the-scientist.com/machine--learning--1951-65792

Nathaniel Rochester

An early advocate (also an IBMer) for using computers to solve problems traditionally done by humans, Rochester helped build the first AI programs. In 1956, he published a classic article in which he and colleagues simulated a network of neurons on IBM 701 and 704 calculators. See Nathaniel Rochester III (1919-2001) - Dharmendra S. Modha

John McCarthy

The organizer of the Dartmouth workshop, McCarthy coined the term "artificial intelligence" (which earned him the top spot amongst the founding fathers of AI) and played a crucial role in defining the field's research agenda. “McCarthy has worked on a number of questions connected with the mathematical nature of the thought process, including the theory of Turing machines, the speed of computers, the relation of a brain model to its environment, and the use of languages by machines”

McCarthy also designed the LISP programming language, which was heavily pitched for use with AI and which inspired LISP machines (the specialized AI computers).

The Legacy Stays

The ideas, collaborations, and research directions that emerged from this gathering laid the groundwork for all of the progress that has been made in the field over the past six decades. Those in attendance, namely Marvin Minsky, Herb Simon, Allen Newell, and John McCarthy, went on to found the three leading AI and computer science programs in the U.S., at MIT, Stanford, and Carnegie Mellon.

The workshop's legacy continues to this day.

The 1960s

The 1960s was an eventful decade for AI, with a shift towards knowledge-based and expert systems and early experiments in natural language processing. Leading this charge was Edward Feigenbaum, a student of Herbert Simon, who was himself a pioneer in decision theory and cognitive science.

Ed Feigenbaum and the Birth of Expert Systems

Feigenbaum's work with Herbert Simon sparked his interest in creating machines that could mimic human expertise. Inspired by Simon's theories on bounded rationality and human problem-solving, Feigenbaum and Allen Newell embarked on a quest to build “thinking machines” after Herbert Simon handed them the manual for the IBM 701 vacuum tube computer. This was their first experience with a commercial binary computer.

“Taking the manual home, reading it, by the morning, I was born again.” ~ Ed Feigenbaum's Search for A.I.

This quest led to the publication of "Computers and Thought" in 1963, a collection of articles on artificial intelligence that quickly became a bestseller. Feigenbaum is also known for EPAM (Elementary Perceiver and Memorizer), a computer model that simulated human learning and memory, which grew out of his doctoral work with Simon.

Building on these foundations, Feigenbaum and his team created Dendral (1965), the first expert system. Dendral used knowledge of chemistry and heuristic search to identify organic molecules from mass spectrometry data, effectively automating scientific inference in a specialized domain (this reminded me of DeepMind’s AlphaFold 🙂).

ELIZA: The 1966 AI that beat GPT-3.5!

Between 1964 and 1966, Joseph Weizenbaum created ELIZA, an early natural language processing program that simulated a Rogerian psychotherapist. Though simple in design, ELIZA's ability to hold seemingly meaningful conversations surprised many, even prompting some users to believe they were interacting with a real person. It’s surprising to me that in the 1960s we already had the same interface we have now (chatting with intelligence). I’d say ELIZA is probably the closest to ChatGPT conceptually, but the tech, the intelligence itself, was rule-based. It was creative but still followed deterministic logic. That said, it still beat GPT-3.5 in the Turing test!

We evaluated GPT-4 in a public online Turing test. The best-performing GPT-4 prompt passed in 49.7% of games, outperforming ELIZA (22%) and GPT-3.5 (20%), but falling short of the baseline set by human participants (66%) from [2310.20216] Does GPT-4 pass the Turing test?

Here is the link if you want to chat with ELIZA: Eliza, Computer Therapist. Also, below is a classic video showing the demo 🙂
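To give a feel for what "rule-based" means here, below is a tiny ELIZA-style responder in Python. It is a sketch of the general pattern-and-template technique, not Weizenbaum's original script: the rules, the `REFLECTIONS` table, and the function names are all made up for illustration.

```python
import re

# Swap first-person words for second-person ones when echoing the user.
REFLECTIONS = {"i": "you", "my": "your", "am": "are", "me": "you"}

# Each rule is a pattern plus a response template (hypothetical examples).
RULES = [
    (re.compile(r"\bi need (.*)", re.I), "Why do you need {0}?"),
    (re.compile(r"\bi am (.*)", re.I), "How long have you been {0}?"),
    (re.compile(r"\bmother\b", re.I), "Tell me more about your family."),
]
DEFAULT = "Please go on."


def reflect(fragment: str) -> str:
    """Rewrite a captured fragment from the user's point of view to ours."""
    return " ".join(REFLECTIONS.get(w.lower(), w) for w in fragment.split())


def respond(text: str) -> str:
    """Return the response of the first matching rule, else a stock reply."""
    cleaned = text.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(cleaned)
        if match:
            return template.format(*(reflect(g) for g in match.groups()))
    return DEFAULT


print(respond("I need my coffee."))
print(respond("The weather is nice."))
```

The same input always produces the same output, and anything outside the rules falls through to a stock phrase, which is exactly the deterministic behavior described above.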

The 1960s laid the groundwork for much of what we see in AI today. The focus on knowledge representation, heuristic search, and early natural language processing set the stage for the development of expert systems, machine learning, and, eventually, the large language models that power today's conversational AI.

The 1970s

In the 1970s, AI research centered around the idea that "computational intelligence," as Edward Feigenbaum put it, was the "Manifest Destiny of computer science."

Refining Expert Systems: From Dendral to Meta-Dendral

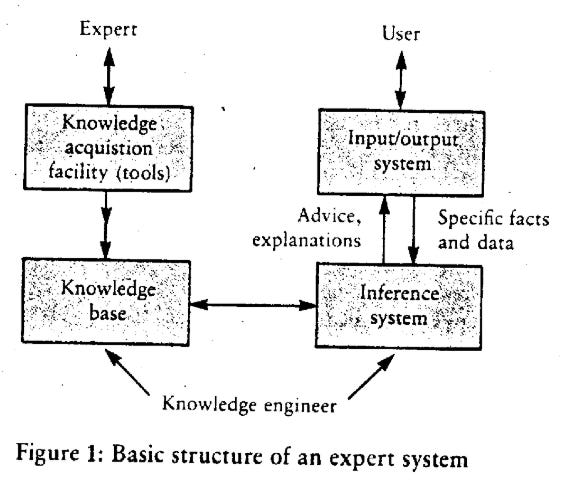

Building on the success of Dendral, researchers developed Meta-Dendral (1975), a system that could automatically generate rules for mass spectrometry from vast datasets. The Dendral project also highlighted the importance of extracting, structuring, and representing expert knowledge in a way that computers could understand, also known as knowledge engineering. This involved collaborating with domain experts, codifying their knowledge, and creating inference engines to apply that knowledge to new problems.

“Knowledge engineers elicit the knowledge from the minds of human experts, shape the knowledge so that programmers can transform it into viable program codes (knowledge base), and create the inference system that uses a knowledge base to derive specific results.“ ~ (PDF) The Fifth Generation

If you think about it, this is not very different from the goals we have today for AI agents, RAGs, and fine-tuned LLMs.
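The split between a knowledge base and an inference engine can be illustrated with a minimal forward-chaining sketch in Python. This is a generic textbook technique and not the design of Dendral or any specific system; the rules about plants and the name `forward_chain` are hypothetical.

```python
def forward_chain(facts, rules):
    """Apply IF-THEN rules repeatedly until no new facts can be derived.

    facts: iterable of known facts (strings).
    rules: list of (conditions, conclusion) pairs, where conditions is a
           tuple of facts that must all be known for the rule to fire.
    """
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            if conclusion not in known and set(conditions) <= known:
                known.add(conclusion)
                changed = True
    return known


# Knowledge base: what a knowledge engineer might elicit from an expert.
PLANT_RULES = [
    (("leaves_yellow", "soil_wet"), "overwatered"),
    (("overwatered",), "advice_water_less"),
    (("leaves_yellow", "soil_dry"), "underwatered"),
    (("underwatered",), "advice_water_more"),
]

print(sorted(forward_chain({"leaves_yellow", "soil_wet"}, PLANT_RULES)))
# Facts the rules never anticipated produce nothing new.
print(sorted(forward_chain({"leaves_brown"}, PLANT_RULES)))
```

The engine itself is generic; all the domain expertise lives in the rule list, which is why eliciting and structuring those rules was the hard part.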

Picture from: (PDF) The Fifth Generation

The LISP Machine: Pioneering AI Hardware

With the architecture and the design of expert systems ready, hardware capable of doing the job was needed to take it to the next step. John McCarthy had developed LISP in the 50s, and the community at the time found it suitable for running expert systems because of its ability to do symbolic manipulation, a key aspect of AI research. The question was, what hardware would be optimized down to the instruction set to execute LISP programs? Please welcome the LISP machine. Those machines even came with IDEs optimized for running/debugging LISP.

Many notable expert systems at the time were developed and deployed on LISP machines. Well-known expert systems of the era include MYCIN (the medical expert) and R1, later known as XCON (the Digital Equipment Corporation configuration expert). The 70s were the rise of LISP machines.

Early Network Effects for AI and Expert Systems

The 70s also saw the rise of ARPANET, the precursor to the internet. This enabled researchers to share knowledge and collaborate on AI projects across different institutions and disciplines. For instance, Feigenbaum's team at Stanford granted access to their AI tools via ARPANET, attracting hundreds of users from fields like biology and pharmaceuticals. Actually, they exposed the only non-DARPA machine at the time on the ARPANET. This added some network effects to AI research back then (but not enough to prevent winter from coming, keep on reading 🙂).

Scaling Even More

The Heuristic Programming Project at Stanford, led by Feigenbaum, aimed to scale up AI research by harnessing the talent of numerous graduate students (early open source 🙂?). This effort resulted in the creation of the "Handbook of Artificial Intelligence", a three-volume encyclopedia that became a standard reference for AI researchers worldwide. It was mostly used for teaching and for aligning researchers and post-grads on the state of the art.

The 1980s

In 1981, Japan launched the Fifth Generation Computer Systems project, a 1 billion dollar project (a lot at the time), aiming to develop powerful computers with AI capabilities like natural language understanding and machine learning. The four generations of computing that came before it were:

the first generation, based on diodes and vacuum tubes

the second generation, based on transistors

the third generation, based on integrated circuits

the fourth generation, based on microprocessors

“Parallel processing will replace the one-step-at-a-time procedures of the standard von Neumann architecture of today's computers; jobs will be divvied up so that many subjobs can be in the works simultaneously” ~ from the fifth generation paper

Finally, there is the fifth generation, which is viewed as whatever is able to run artificial intelligence. The Japanese stressed that parallel processing is needed, which makes you think about GPUs but also quantum computers.

"Whoever establishes superiority in knowledge and technology will control the balance of world power regulating both cost and the availability of knowledge" ~ McCorduck and Feigenbaum from the fifth generation paper

“The Japanese expect that use will be tremendously expanded if people can communicate with the system in natural language such as Japanese, English or Swahili” from fifth generation paper

The Japanese did have the foresight indeed. Natural language as a UX did wonders and “tremendously expanded” the use of AI (look at ChatGPT 🙂).

Finally, another quote to show you how close the thinking was to where we are today:

“Are your plants unaccountably turning yellow? Have you found that even your broker can’t keep up with the latest money market options? Are you afraid of doctors and lawyers or too poor to call them in when you need them? Expert systems will be on call day or night, providing instant, specific solutions to your problems.”

Toward the end of the paper, the author asks Ed Feigenbaum whether expert systems raise ethical and legal problems. "No," he said; there will always be "a human in the loop" taking full responsibility. Expert systems are designed to aid decision-makers, not replace them.

Despite the potential, AI researchers recognized the limitations of expert systems (we will talk about AI Winters in the coming sections). These systems struggled to learn independently, lacked common sense reasoning, and were unable to replicate the intuitive and creative aspects of human thought. Nevertheless, the work of the 1970s and the 1980s laid a crucial foundation for the AI breakthroughs that would follow.

Winter is Here!

The science kept coming, and the research did not stop, but the applications were nowhere near. Having worked as a researcher myself for years, I know it can get very interesting to just keep digging more and more without a clear path to viability. If I can publish a paper at a well-known conference and contribute my learnings, I win, or that’s how I thought back then.

After the Dartmouth summer proposal for AI research, many optimistic predictions were made, especially those ignited by the promise of groundbreaking applications. Throughout the 1960s, AI research received significant funding from DARPA, with few requirements for delivering tangible results.

First AI Winter

AI research and writing continued, for example with the “Perceptrons” book by Marvin Minsky and Seymour Papert and the checkers player by Arthur Samuel, but there was little commercial application or use for them. In 1973, Sir James Lighthill, a professor and applied mathematician, published a report that critically assessed AI research. He was supportive of the idea and applications of AI for industrial automation but had concerns about the research trying to meld it with the analysis of brain function. It’s said that this report contributed strongly to funding cuts in the UK and raised doubts about the field's potential globally, including in the U.S., which might have led to the first “AI Winter”, a quiet period for AI research and development.

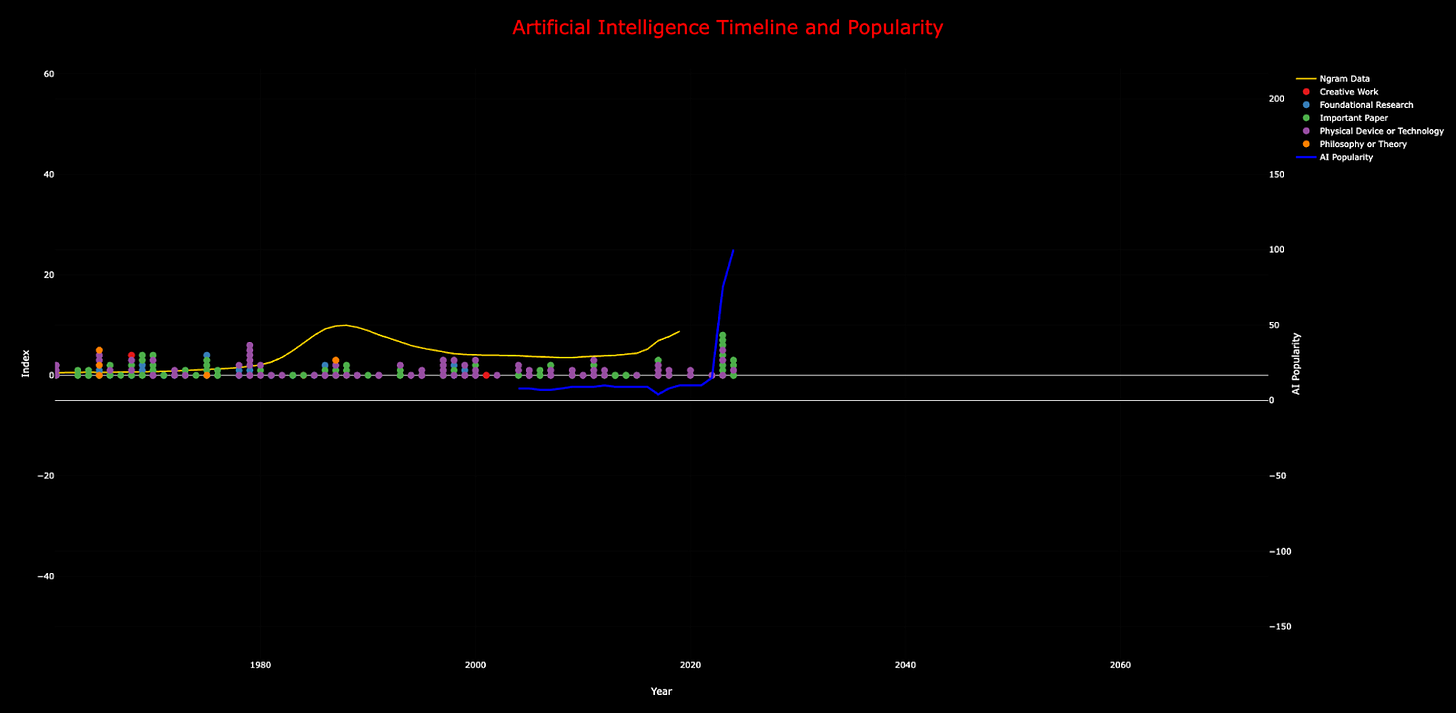

I did a bit of digging to see if research and development were really affected, and whether this period of time deserved to be referenced as a “Winter”. It didn’t seem that content stopped being generated, be it research or otherwise. On the contrary, there was a steady increase in the number of “events” (see the circles) related to AI and in the amount of content generated about AI, i.e., AI research was more active than ever during the first “AI Winter”.

I’d rather consider the first AI winter a clash of expectations. As it was an early field, people (especially the scientific community) knew there was still progress to be made. In other words, it didn’t burst an economic bubble but rather instilled some doubts, which did impact or delay viability for commercial use.

1980-1987: Renewed AI Excitement

The 1980s reignited interest in AI after the skepticism of the 70s, mainly driven by the emergence of expert systems. Ed Feigenbaum even published a book about the applications of expert systems, highlighting case studies from many companies, including IBM, FMC, Toyota, American Express, and more. This optimism and the references to commercial applications attracted increased funding, leading to a period of renewed excitement in the field. Also, as you can see from the graph below, the AI curve and Ngram mentions reached their peak!

1987-1994: The Real “AI Winter”

The enthusiasm for expert systems in the 80s was short-lived as their limitations became apparent. These systems struggled to handle novel information and situations that fell outside their pre-programmed knowledge base (they were IF/ELSE rule-based). Additionally, the market for Lisp machines, a key platform for the development of expert systems, collapsed. Here are some of the reasons:

More versatile and cheaper technologies, like C++ and RISC computers, made specialized LISP machines (requiring a custom TTL processor) less appealing.

The existing Lisp Machine software was not flexible or user-friendly enough for the average developer (don’t we always have this problem even today 🙂).

In addition to the above, DARPA's decision in 1987 to cut AI funding again, coupled with the failure of the Japanese Fifth Generation Computer project in 1991, deepened the second AI winter, increased doubts, and might have led to the bursting of the AI economic bubble.

> Note: I find the timing of Nvidia's founding (1993) interesting, just a bit after the decommissioning of the fifth-generation project, which initially had aimed to optimize computing for AI applications using a form of parallel processing. I didn’t dig much here, but the correlation did not escape me. I'm not sure if Jensen Huang had foresight reaching that far ahead.

The combination of shifting market demands from LISP machines/expert systems, management and financial struggles, technological limitations, and competition from more versatile and cheaper technologies are considered reasons behind the “AI Winter.” Below, you can see the trend going down after 1987, and there were also fewer publications and writings (see the circles).

More readings on AI winters here.

1994-Present: Steady State & Boom!

Despite the downturn that came with the AI winter in the late 1980s, AI research kept going, and interest in the research community didn’t suffer as much. In other words, AI stayed in the “lab” rather than disappearing, after the realization that more work was needed to produce better ROI for enterprises, at least enough to justify spending on the skills and hardware to make it happen. Let’s see what happened by looking at the timeline:

So we can see that AI content peaked in the 80s, the AI winter started, and less content was produced. But research and progress kept on. One of the most notable moments in AI history came in 1997, when IBM’s Deep Blue defeated Garry Kasparov, the world chess champion at the time, a milestone for single-task models of intelligence, which were, and still are, a goal for AI research. Notably, from the late 1990s towards 2010, most AI development happened in robotics, domestic robots, etc.

https://twitter.com/olimpiuurcan/status/1524249481512103937

2009 onwards saw numerous manifestations of AI in areas such as autonomous vehicles, AI ethics and governance, quantum computing research, and the integration of AI into various industries, including healthcare, finance, and entertainment. Notably, Google introduced the self-driving car project in 2009. In 2011, IBM introduced Watson, which defeated human champions at Jeopardy! (historically, it seems like IBM knows how to win with AI, be it Deep Blue or Watson).

In addition to self-driving cars, robotics, and game-playing AI, deep learning with neural networks started to flourish around this time span. In 2012, AlexNet, a deep neural network, won the ImageNet Large Scale Visual Recognition Challenge, which sparked interest in deep learning and neural networks.

In 2014, Generative Adversarial Networks (GANs) were introduced, which started to reshape the generation of very realistic images and videos. In 2015, Google DeepMind developed AlphaGo, which went on to defeat Lee Sedol, one of the world's top Go players, in 2016.

By 2017, researchers were applying reinforcement learning to a range of tasks, from playing video games to robotic control, demonstrating machines' ability to learn complex strategies. The same year saw the publication of the paper that introduced the transformer architecture, built on attention. The following year, 2018, Google introduced BERT (Bidirectional Encoder Representations from Transformers), making a dent in optimizing context awareness in natural language processing and paving the way for future applications (Mr. ChatGPT).

From 2019 to 2024, AI witnessed major milestones. OpenAI's GPT-2, GPT-3, and GPT-4 (also Claude, Gemini, etc.) showcased language models' power to generate human-like text (also raising questions/concerns about ethical AI use). Noteworthy is that in 2020, AI became a very useful tool in combating the COVID-19 pandemic, even aiding in prediction and vaccine development. DeepMind's AlphaFold 2 largely solved the long-standing protein folding problem the following year, potentially revolutionizing biology and medicine. Very recently, Google announced AlphaFold 3, which aids in accurately predicting the structure of proteins, DNA, RNA, ligands and more, and how they interact. We hope it will transform our understanding of the biological world and drug discovery.

I’d say the 2020s is finally the decade of AI viability for commercial application at a large scale. The growth trend of AI is rising exponentially with no signs of stopping. That said, we saw a similar trend in the early 1980s, followed by a rapid fall in interest by the mid 1980s (so we have a couple more years to break the record 🙂), see the figure below:

I personally see no sign of this stopping or decaying now, but I could see the overpromise of AGI setting the wrong expectations, which might dampen the growth trend. After all, humans continuously raise their expectations when exposed to better experiences and better things, and expectations are hard to reverse, so we should be careful about what and how we communicate to avoid an economic bubble burst!

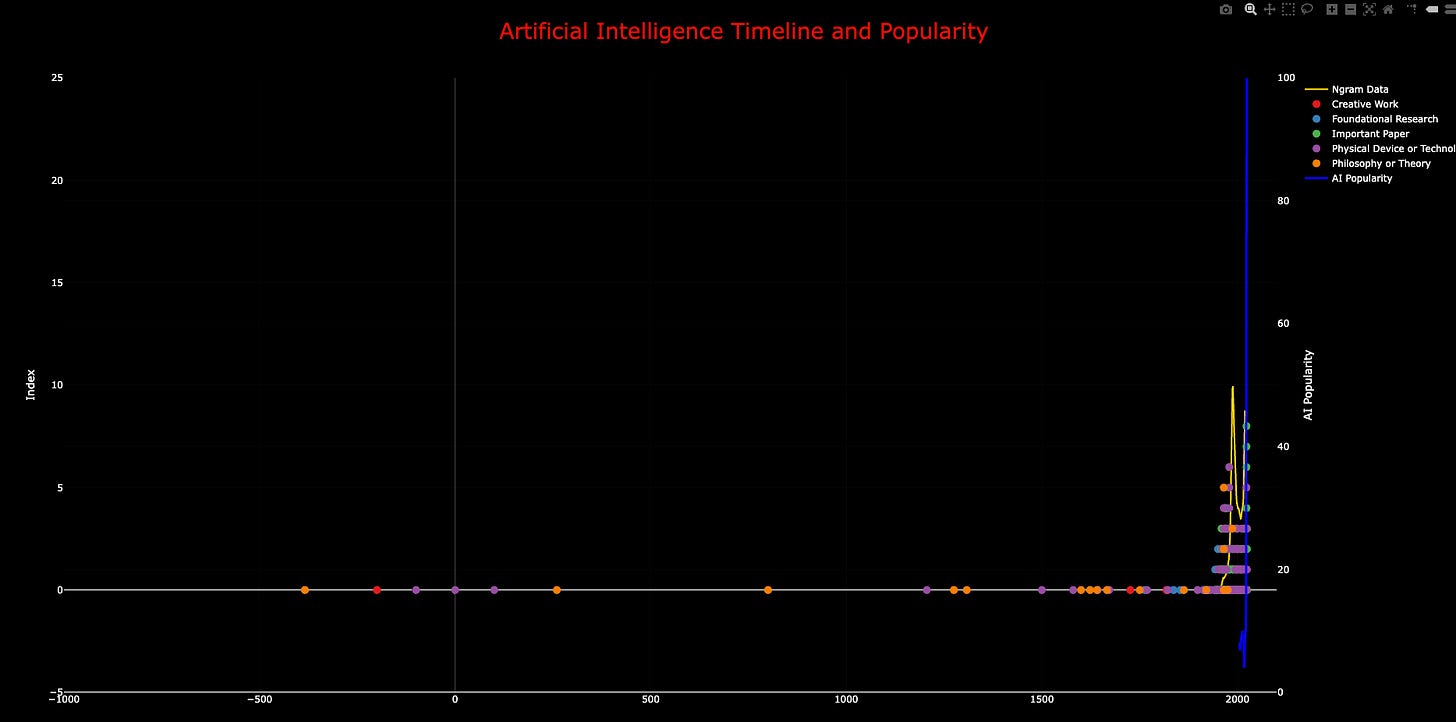

Below is another view of the AI trend for the 2000s:

AI History Visualization

Before we part ways, I’ll point you to the AI timeline visual I used throughout this post.

You can find the interactive visual here and the code here (feel free to contribute :))

In the next post, we will explore the lessons learned from the history of AI covered here, and muse on how we can apply them to inform how we build successful innovations, products, and businesses. Stay tuned!

That’s it! If you want to collaborate, co-write, or chat, reach out via subscriber chat or simply on LinkedIn. I look forward to hearing from you!