The AI Cybersecurity Market: Navigating Opportunities and Risks

Understanding the key trends and opportunities.

The intersection of artificial intelligence (AI) and cybersecurity is rapidly evolving, creating both opportunities and challenges. AI is transforming the cybersecurity landscape, offering new approaches to combat sophisticated cyber threats. But with this power comes great responsibility. This post delves into the dynamic AI cybersecurity market, exploring the key trends, opportunities, and risks that organizations must navigate to build a secure and resilient digital future.

This convergence is happening amidst growth in the global AI market, projected to reach $826 billion by 2030 from $196.63 billion in 2023 [Markets & Markets]. Within this growing market, the AI cybersecurity portion of it is an expanding segment, anticipated to reach $134 billion by 2030 from $24.3 billion in 2023 [Statista].

Below is an executive summary.

Key Drivers:

Generative AI Surge: Generative AI is rapidly gaining traction, impacting various functions within organizations and fueling demand for AI-powered applications. [Gartner Forecast Analysis]

Widespread Adoption: A large majority (84%) of organizations leverage AI-based cybersecurity tools, with 75% focusing on network security [World Economic Forum, Statista].

Generative AI Spending Surge: Expenditure on generative AI solutions is expected to skyrocket, accounting for 35% of total AI spending by 2027, up from a mere 8% in 2023 [Gartner].

Booming Market: The AI cybersecurity market is poised for exponential growth, projected to reach a staggering $134 billion by 2030, reflecting a dramatic increase from its 2023 valuation of $24.3 billion [Statista].

Rapid CAGR: This translates to a remarkable compound annual growth rate (CAGR) of 22.3% from 2023 to 2033 [Market.us], driven by escalating cyber threats, widespread cloud adoption, and the demand for proactive and scalable security solutions.

AI Software Spending Boom: Global spending on AI software is projected to reach $297.9 billion by 2027, with generative AI software expenditure increasing significantly. [Gartner Forecast Analysis]

Expansion of the Attack Surface: Cloud, growth in the number of IoT devices has expanded the attack surface, creating new vulnerabilities that require AI-driven security measures. [Market.us]

Growing Awareness and Investment: Organizations are increasingly recognizing the importance of AI in cybersecurity, leading to increased investments in research, development, and deployment of AI-powered security tools. [World Economic Forum]

Cybersecurity Talent Gap: The cybersecurity industry faces a significant talent shortage, highlighting the need for AI-powered solutions to automate tasks and augment human expertise. [World Economic Forum]

Regulatory Landscape: Cybersecurity and privacy regulations are becoming increasingly stringent, influencing the development and adoption of AI-powered security solutions. [World Economic Forum]

Cybersecurity Talent Shortage: The cybersecurity industry faces a significant talent gap, making it difficult for organizations to effectively manage security operations. AI can help bridge this gap by automating tasks, providing insights, and augmenting human expertise. [World Economic Forum]

Collaborations on Trustworth AI: focus on advancing safe, secure, and trustworthy AI, aligning regulatory frameworks, promoting ethical AI research, and broadening cooperation in AI protection and cybersecurity. [UAE and US Collaboration on AI ]

The U.S. and the UAE reaffirm their commitment to advancing safe, secure, and trustworthy artificial intelligence (AI) technologies.

Key principles include fostering international AI frameworks, aligning regulatory frameworks, and promoting ethical AI research and development.

The collaboration will focus on advancing safe, secure, and trustworthy AI, aligning regulatory frameworks, promoting ethical AI research, and broadening cooperation in AI protection and cybersecurity.

President Biden and President H.H. Sheikh Mohamed bin Zayed Al Nahyan will oversee the development of the memorandum of understanding.

The U.S. and the UAE are committed to deepening collaboration in AI and related technologies for a more prosperous and secure future.

Key Trends

Integration of AI into Enterprise Applications: Over 70% of independent software vendors are expected to integrate generative AI capabilities into their enterprise applications by 2026. [Gartner Forecast Analysis]

AI Adoption Phases: Organizations are at various stages of AI adoption, with many still in the experimentation or planning phases. [Gartner Forecast Analysis]

Generative AI's Impact on Security: While generative AI offers benefits for content creation and automation, it also presents new security risks, such as the generation of malicious code and sophisticated phishing attacks. Organizations must adapt their security strategies to address these emerging threats. [CBH]

Explainable AI for Enhanced Trust: The lack of transparency in some AI algorithms can hinder trust and adoption. The development of explainable AI, which provides insights into how AI systems make decisions, is crucial for building confidence in AI-driven security solutions. [Gartner Forecast Analysis]

Regulatory Landscape: Evolving cybersecurity and privacy regulations, such as GDPR and CCPA, are influencing the development and deployment of AI-powered security solutions, emphasizing data protection and responsible AI practices. [World Economic Forum]

Opportunities:

Development of Advanced Threat Detection and Response Solutions: AI can significantly enhance threat detection accuracy, accelerate incident response times, and minimize the impact of cyberattacks.

Proactive Security Measures: AI enables predictive analytics and threat intelligence, allowing organizations to identify and address potential vulnerabilities before they are exploited proactively. [Markets & Markets]

Automated Security Operations: AI can automate routine security tasks, freeing up security professionals to focus on more complex and strategic initiatives. [Gartner Forecast Analysis]

Enhanced Security for Cloud and IoT Environments: AI-powered solutions can address the unique security challenges posed by cloud computing and the growing number of connected devices. [Market.us

Challenges:

Ethical Concerns and Bias: AI algorithms can be biased, raising ethical concerns regarding fairness and accountability. [CBH]

Adversarial AI: Attackers can leverage AI to develop sophisticated attacks, posing new challenges for cybersecurity professionals.

Data Privacy and Security: AI systems rely on vast amounts of data, raising concerns about data privacy and security. [CBH]

Explainability and Transparency: Lack of transparency in AI algorithms can hinder trust and adoption [Gartner Forecast Analysis]

Conclusion:

The AI security market is experiencing rapid evolution, driven by the need for solutions to counter rising cyber threats. With power comes great responsibility though, while AI offers potential for enhancing security, organizations must also address the risks associated with its malicious use.

Enhancing Security: AI offers significant potential for bolstering cybersecurity defenses, with organizations increasingly employing it for:

Automating routine tasks: Freeing up human analysts for more complex threats.

Real-time threat detection: Enabling rapid response and mitigation.

Predictive analytics: Proactively identifying and addressing vulnerabilities.

Emerging Risks: However, AI also presents new challenges as adversaries exploit it for malicious purposes:

Phishing attacks.

Automated malware development.

Related musing here:

Going in Details

AI Market Statista

Reference: Artificial Intelligence - Global | Statista Market Forecast

Important Technologies Impacting Market Growth:

Machine Learning: Forms the foundation of the market and exhibits consistent growth throughout the period.

Autonomous & Sensor Technology: Shows steady growth, gaining momentum towards the latter half of the projection period.

Natural Language Processing: Experiences significant growth, becoming a major contributor to the overall market size by 2030.

Computer Vision: Demonstrates strong and consistent growth, becoming the second largest segment by 2030.

AI Robotics: While starting with a smaller market size, it exhibits rapid growth, becoming a prominent segment by 2030.

Key Observations:

The overall AI market is projected to experience high growth, reaching nearly 800 billion USD by 2030.

Machine Learning remains a dominant segment, while Computer Vision and AI Robotics emerge as important growth drivers.

Natural Language Processing also shows notable growth, increasing its importance in various applications.

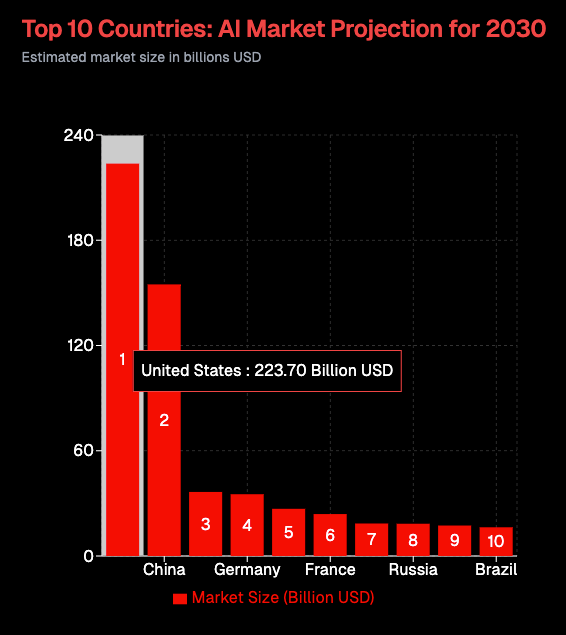

As for the top 10 countries, this bar chart depicts the estimated market size of the AI market in the top 10 countries for 2030.

United States: Dominates the market with a projected size of 223.7 billion USD, significantly larger than any other country. The US remains the clear leader in the AI market, driven by factors like technological advancements, research and development investments, and a thriving tech ecosystem.

China: Ranks second with a market size significantly smaller than the US but considerably larger than the remaining countries.it can be considered a strong contender, though with export controls

Germany, France, Russia, Brazil, etc.: Exhibit relatively smaller market sizes than the US and China.

Gartner Forecast Analysis

Reference: Forecast Analysis: AI Software Market by Vertical Industry, 2023-2027

AI software spending will grow to $297.9 billion by 2027. Over the next five years, market growth will accelerate from 17.8% to 20.4% in 2027, with a 19.1% CAGR. Government has the largest spend of over $70 million by 2027, but oil and gas is growing fastest with a 25.2% CAGR.

McKinsey: GenAI Adoption Spikes

Reference: The state of AI in early 2024: Gen AI adoption spikes and starts to generate value

Gen AI Adoption Surge: 65% of organizations regularly use gen AI, a significant increase from the previous year.

Common Use Cases: Gen AI adoption is most prevalent in marketing, sales, product development, and IT, with an average of two functions utilizing it.

Overall AI Adoption Growth: 72% of organizations now utilize AI, up from 50% previously, with increased adoption across various industries.

Increased AI Investments: Organizations are allocating budgets for both gen AI and analytical AI, expecting further investment growth over the next three years.

Gen AI Risks: 44% of respondents reported negative consequences from gen AI, including inaccuracy, cybersecurity concerns, and explainability issues, highlighting the need for risk mitigation.

High-Performer Success: Organizations effectively leveraging gen AI attribute a significant portion of their EBIT to its deployment, often utilizing it across multiple functions and implementing risk best practices.

High-Performer Challenges: These organizations also face challenges with data governance, integration, and operating models, emphasizing the importance of addressing these for successful gen AI adoption.

Diverse Research Sample: The research involved a diverse group of participants across various regions, industries, company sizes, and functional specialties, providing valuable insights into the current AI landscape.

StackOverflow Developer Survey

Reference: AI | 2024 Stack Overflow Developer Survey

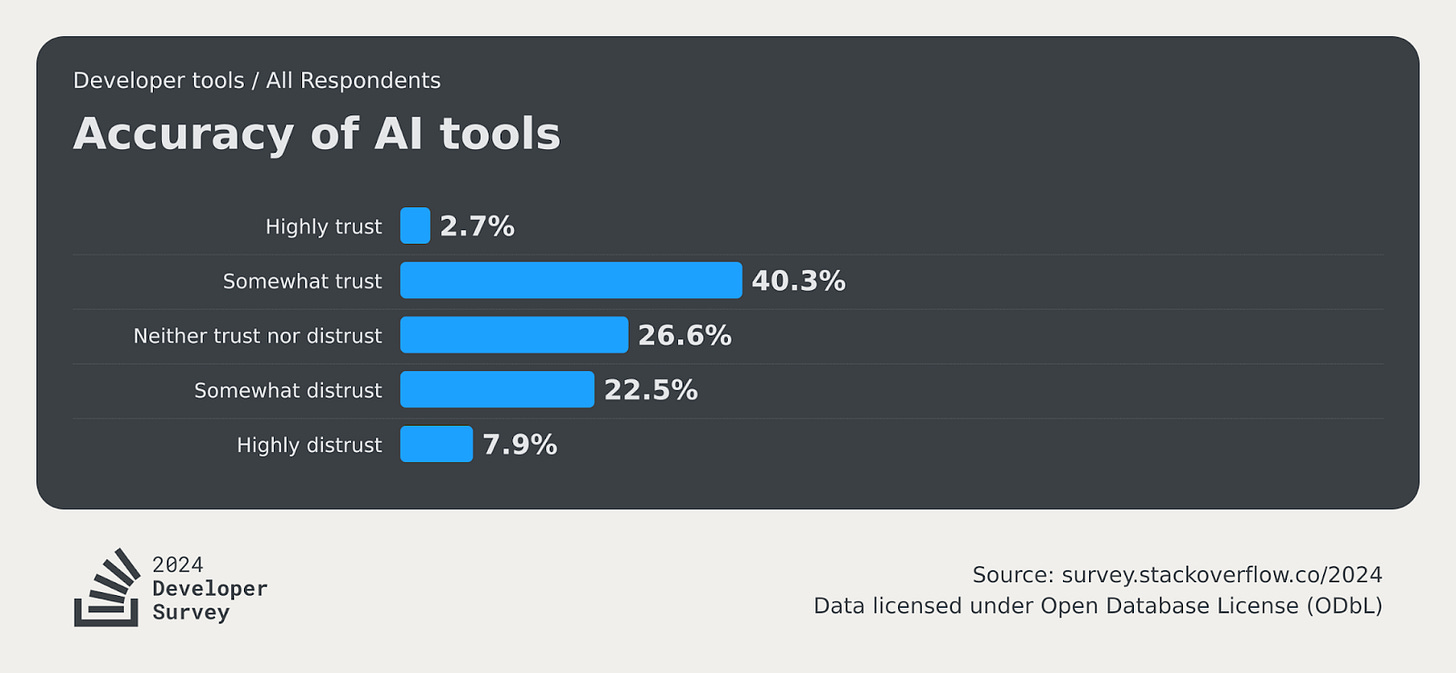

Similar to last year, developers remain split on whether they trust AI output: 43% feel good about AI accuracy and 31% are skeptical. Developers learning to code are trusting AI accuracy more than their professional counterparts (49% vs. 42%).

Professional developers agree the issue is not user error: twice as many professionals cite lack of trust or understanding the codebase as the top challenge of AI tools compared to proper training.

Market.us

Reference: AI In Cybersecurity Market Size, Share | CAGR of 22.3%

The Global AI In Cybersecurity Market size is expected to be worth around USD 163.0 Billion by 2033, from USD 22 Billion in 2023, growing at a CAGR of 22.3% during the forecast period from 2024 to 2033.

AI in cybersecurity refers to the application of artificial intelligence technologies in detecting, preventing, and mitigating cyber threats and attacks. It involves using machine learning, natural language processing, and other AI techniques to analyze vast amounts of data, identify patterns, and detect anomalies that may indicate potential security breaches or malicious activities.

The AI in cybersecurity market is rapidly growing as organizations recognize the need for advanced solutions to combat the increasing complexity and sophistication of cyber threats. AI technologies can enhance traditional cybersecurity measures by providing real-time threat detection, automated incident response, and predictive analytics to proactively identify and address potential vulnerabilities.

AI in cybersecurity encompasses a range of applications, including malware detection, network intrusion detection, user behavior analytics, and threat intelligence. By leveraging AI algorithms, cybersecurity systems can continuously learn from new data and adapt to evolving threats, improving their accuracy and effectiveness in detecting and mitigating security incidents.

The market for AI in cybersecurity is driven by factors such as the growing frequency and severity of cyberattacks, the prevalence of cloud, IoT in the past decade, and the need for scalable and intelligent security solutions. Vendors and emerging startups are investing in AI capabilities to develop innovative products and services that address the evolving threat landscape.

According to a report by Security Intelligence, the average total cost of a data breach in 2022 rose to ~$4.35 million, representing a modest increase of 2.6% compared to the previous year’s average of ~$4.24 million. This upward trend highlights the growing financial impact that cyberattacks can have on organizations worldwide.

European Union Agency for Cybersecurity (ENISA) observed a noteworthy surge in the adoption of AI-based security solutions, with a remarkable 30% increase over the past year. This surge can be attributed to organizations’ proactive efforts to bolster their cyber resilience and protect sensitive data from sophisticated attacks.

World Economic Forum

The World Economic Forum’s “Global Cybersecurity Outlook 2023” (see World Economic Forum's Global Cybersecurity Outlook 2023) report revealed that an impressive 84% of surveyed organizations are leveraging AI-based tools to bolster their cybersecurity capabilities. These organizations recognize the potential of artificial intelligence in fortifying their defenses and mitigating risks associated with cyber threats.

Cybersecurity Perception & Prioritization:

95% of business executives and 93% of cyber executives agree that cyber resilience is integrated into their enterprise risk-management strategies.

Only 36% of organizational leaders who meet at least monthly on cybersecurity feel confident in their organization's cyber resilience.

90% of respondents are concerned about the cyber resilience of third parties who have direct connections to or process their organization's data.

Only 25% of respondents stated that their most senior cybersecurity executive reports directly to the CEO.

56% of cyber leaders meet with business leaders monthly or more often to discuss cybersecurity.

Cybersecurity Talent Gap:

59% of business leaders and 64% of cyber leaders ranked talent recruitment and retention as a key challenge.

Less than half of respondents reported having the people and skills needed today to respond to cyberattacks.

Impact of Regulations

73% of respondents agree that cyber and privacy regulations effectively reduce their organization’s cyber risks, a significant increase from the previous year.

76% of business leaders and 70% of cyber leaders agree that stronger regulation enforcement would increase their organization's cyber resilience.

Markets & Markets

The AI in Cybersecurity market report outlines a technology roadmap from 2023 to 2028, breaking anticipated advancements into short-term, mid-term, and long-term phases.

Short-term Roadmap (2023-2025)

Automating Incident Response: AI will be increasingly utilized to automate routine incident response tasks, reducing response times and minimizing manual errors.

Integration with Threat Intelligence: AI-driven threat intelligence platforms will be integrated with security tools, providing contextual awareness to enhance detection capabilities.

Explainable AI Adoption: The incorporation of explainable AI will improve transparency and enable a better understanding of threat detection mechanisms, helping security teams trust and verify AI decisions.

Mid-term Roadmap (2025-2028)

Intelligent Security Systems: Combining AI, cognitive computing, and automation will result in systems that can reason, learn, and autonomously make decisions, transforming cybersecurity operations.

Federated Machine Learning Models: The use of federated learning models will rise, allowing organizations to collaboratively enhance threat intelligence without compromising data privacy.

Long-term Roadmap (2029-2030)

Human-AI Collaboration in Security Operations: AI and human operators will collaborate seamlessly, leveraging each other’s strengths to optimize decision-making and threat response.

Quantum-Resistant Encryption: AI will be integrated with quantum-resistant encryption protocols to secure sensitive data against emerging threats.

AI and Blockchain Collaboration: AI systems will work with blockchain technology to safeguard data transactions, ensuring data integrity and security.

Market Overview

AI Cybersecurity Market

Market Size: Valued at approximately $22.4 billion in 2023.

Growth Projection: Expected to grow at a Compound Annual Growth Rate (CAGR) of 20.8%, reaching around $147.5 billion by 2033.

Overall AI Market

Market Size: Estimated at $196.63 billion in 2023.

Growth Projection: Projected to grow to approximately $826 billion by 2030.

Market Comparison

The AI cybersecurity market constitutes a substantial segment of the broader AI market, although smaller in size. In 2023, it represented about 11.4% of the overall AI market, with the potential to increase to 18% by 2030. Both markets are witnessing rapid expansion, driven by AI's growing role in safeguarding digital assets and the increasing importance of cybersecurity in an interconnected world.

CBH

Reference: How to Craft a Proactive Generative AI Strategy To Manage Cybersecurity Risks

35% of global companies are currently using some form of artificial intelligence (AI) in their operations, with 42% exploring AI integration, as per an Exploding Topics analysis.

Generative AI and LLMs are beneficial for content creation but poses risks such as publication of misleading content, data breaches, bias perpetuation, and legal/ethical violations. Risks involves establishing governance programs, implementing defense in depth, and ensuring model security through techniques like input data validation and anomaly detection.

The National Institute of Standards and Technology (NIST) has developed the Artificial Intelligence Risk Management Framework (AI RMF) to help businesses mitigate AI risks. See: NIST AIRC - Playbook

Characteristics of a trustworthy (a better umbrella here is responsible AI) generative AI system include safety, reliability, secure data handling, explainability, and fairness in training data.

Organizations should continuously evaluate and enhance their security measures to adapt to the evolving AI landscape and regulatory frameworks.

Use-cases and technological trends

Sovereign AI

Sovereign AI: What it is, and 6 ways states are building it | World Economic Forum

Security & Control: Maintaining complete control over data, algorithms, and infrastructure to mitigate risks of foreign interference, data breaches, and misuse of AI.

Secure Data Centers: Physically secure facilities with high-performance computing capabilities for data processing and storage.

Data Management Platform: Tools for data acquisition, cleaning, transformation, governance, and secure storage (cloud, data lakes, etc.).

AI and Data Usage Policies: Establishing processes for reviewing the use of data in AI training and fine-tuning. Establishing processes for reviewing proposed use cases for AI. Publishing and enforcing these decisions through technical and non-technical means.

Supply Chain Security for AI: Generating and using AI BOM and provenance data. Signing and verifying AI models.

AI Development Platform:

ML Frameworks & Tools: Providing access to leading frameworks (TensorFlow, PyTorch) and development tools for building, testing, deploying, and monitoring AI models.

AI Hardware: Investing in or securing access to specialized hardware like GPUs and TPUs to accelerate AI training and inference.

Internal Development Platforms: Enhancing existing IDPs to give developers access to the AI hardware they need, generate supply chain security artifacts for AI, and enforce AI security and compliance policies; all through automation rather than paperwork.

Software & Applications:

Vertical AI Solutions: Developing AI applications tailored to specific national priorities, such as healthcare, finance, defense, etc.

Core AI Technologies: Building expertise and tools in key areas like NLP, computer vision, and robotics.

Ethical Considerations: Ensuring the responsible and ethical development of AI, addressing bias, transparency, and societal impact.

Real stories backing up the use-case

AI Technology Trends

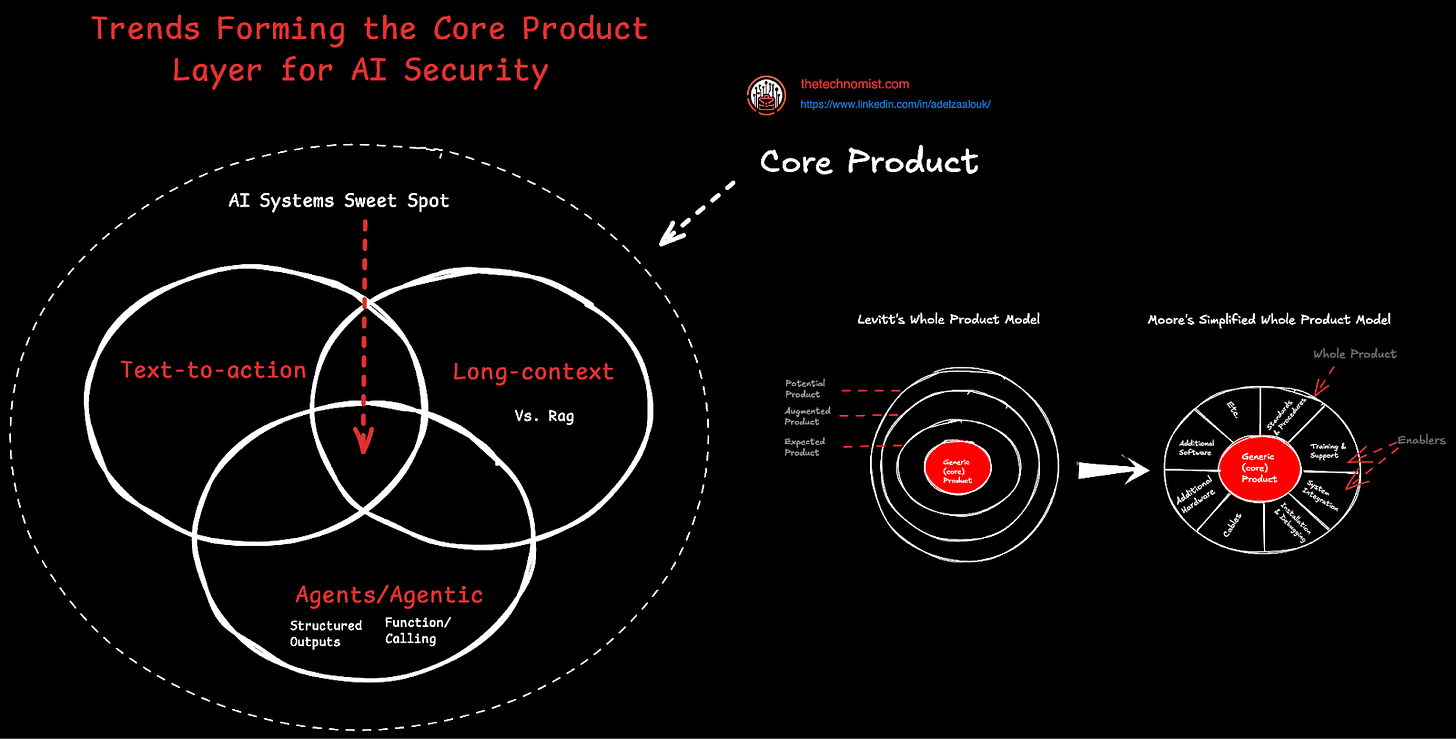

In a previous article, we explored the idea of compound AI systems as key building blocks in the making of whole products. We discussed agents/agentic, RAG, and other enabling technologies. These technologies emerged from trends through which the next generation of AI products will be made of, including those for AI security. These trends/technologies will be used to amplify a system's security but are also systems that need to be secured. For example:

Long-context reasoning: By leveraging long-context models, AI security systems can analyze vast amounts of data over extended time periods, identifying patterns and anomalies that may indicate emerging threats. This can be important for detecting sophisticated attacks that may involve slow, subtle manipulations over time. For instance, detecting insider threats that gradually escalate privileges or analyzing long-term trends in network traffic to identify subtle deviations indicative of a compromise.

Text-to-action: Combining long-context reasoning with text-to-action capabilities allows AI security systems to not only identify threats but also automatically generate and execute appropriate responses. For example, an AI system could analyze a complex security incident report, identify the root cause, and automatically initiate actions like isolating infected devices, patching vulnerabilities, or alerting relevant personnel.

Agents/Agentic will enhance AI security by enabling proactive and autonomous threat detection and response. These AI agents can continuously monitor systems, analyze data, and adapt to evolving threats, automatically triggering remediation actions to neutralize attacks. They can also conduct proactive threat hunting, automate investigations, personalize security policies based on user behavior and context, and improve collaboration between AI systems and human analysts. Ultimately, agents/agentic technology empowers organizations to build more robust and resilient security systems that can effectively combat the ever-growing complexity of cyber threats.

These new AI trends/technologies can be integrated into security products to enhance their effectiveness. For example, integrating agent-based threat detection into a SIEM (Security Information and Event Management) system can improve its ability to identify and respond to threats in real-time. Similarly, incorporating long-context reasoning into vulnerability management tools can enable predictive analysis and proactive patching.

Summary

The AI cybersecurity market is well positioned for growth, driven by the increasing sophistication of cyber threats and the widespread adoption of AI-powered security solutions. While AI offers the potential for enhancing cybersecurity, organizations must also address the ethical concerns and risks associated with its use.

Key Takeaways:

Rapid Market Growth: The AI cybersecurity market is expected to reach $134 billion by 2030, with a CAGR of 22.3%.

Generative AI is a Key Driver: Spending on generative AI solutions is rising, and it's being rapidly integrated into enterprise applications.

AI Enhances Security: AI enables proactive threat detection, automated security operations, and enhanced security for cloud and IoT environments.

Emerging Risks: The malicious use of AI, including phishing attacks and malware development, presents new challenges.

Responsible AI is important: Addressing ethical concerns, bias, and data privacy is essential for the responsible development and deployment of AI in cybersecurity.

Looking Ahead:

Organizations must proactively manage the risks associated with AI while harnessing its potential to strengthen cybersecurity defenses.

To ensure the safe and ethical development of AI for cybersecurity, continued collaboration between governments, industry, and researchers is needed.

Trends like long-context reasoning, text-to-action capabilities, and the development of autonomous AI agents will shape the future of AI in cybersecurity.

By navigating the opportunities and challenges responsibly, organizations can leverage AI to build a more secure and resilient digital future.

That’s it! If you want to collaborate, co-write, or chat, reach out via subscriber chat or simply on LinkedIn. I look forward to hearing from you!